This post covers installing a VM on VMware ESXi 5.1 using an NFS share hosted on an Arch Linux installation. The VMware website offers some best practices for using NFS. Unfortunately, this setup runs on consumer-grade hardware (i.e. laptops that were available), so most of those best practices have to be skipped. For test purposes, this is considered acceptable.

Step 1:

Install the NFS server on a local machine, in this case an Arch Linux machine. Arch Linux uses pacman to install packages and systemd to manage services.

pacman -S nfs-utils

systemctl start rpc-idmapd

systemctl start rpc-mountd
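To confirm that the services registered with rpcbind, the output of `rpcinfo -p` can be checked for the expected entries. The following is a minimal sketch: the helper name `missing_rpc_services` is hypothetical, and it assumes the service names appear in the last column of the output, which depends on `/etc/rpc` being present.

```shell
#!/bin/sh
# Reads `rpcinfo -p` output on stdin and prints every required service
# that is not registered over TCP. Prints nothing when all are present.
# Usage on the NFS server (assumption):  rpcinfo -p | missing_rpc_services
missing_rpc_services() {
    awk '
        $3 == "tcp" { seen[$5] = 1 }
        END {
            n = split("portmapper mountd nfs", want, " ")
            for (i = 1; i <= n; i++)
                if (!(want[i] in seen)) print want[i]
        }
    '
}
```

An empty result suggests rpcbind, mountd, and nfsd are all reachable over TCP; any name printed is a service that still needs to be started.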

Step 2:

Open some ports. This machine uses a separate chain called "open" for open ports and services.

iptables -A open -p tcp --dport 111 -j ACCEPT

iptables -A open -p tcp --dport 2049 -j ACCEPT

iptables -A open -p tcp --dport 20048 -j ACCEPT
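The three ports correspond to rpcbind (111), nfsd (2049), and mountd (20048 in this setup). As a sketch, the same rules could be limited to a single client address. The function name `nfs_rules` and the source restriction are assumptions, not part of the original setup, and the function only prints the rules so they can be reviewed before being applied.

```shell
#!/bin/sh
# Print (do not apply) one ACCEPT rule per NFS-related TCP port,
# restricted to a single source address.
# $1 = chain name (the post uses "open"), $2 = client address (assumption).
nfs_rules() {
    chain=$1
    src=$2
    for entry in 111:rpcbind 2049:nfsd 20048:mountd; do
        port=${entry%%:*}   # text before the colon
        name=${entry#*:}    # text after the colon
        printf 'iptables -A %s -s %s -p tcp --dport %s -j ACCEPT  # %s\n' \
            "$chain" "$src" "$port" "$name"
    done
}
```

Running `nfs_rules open 10.0.0.1` prints three commands; once they look right, the output can be piped to `sh` as root.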

Step 3:

Create the directory to be exported, add it to the exports file, and re-export the file systems.

mkdir /srv/nfs

echo "/srv/nfs 10.0.0.1(rw,no_root_squash,sync)" >> /etc/exports

exportfs -rav
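Because `echo ... >> /etc/exports` appends a new line on every run, repeating this step leaves duplicate entries. A small guard avoids that. The helper name `add_export` is hypothetical, and the sketch assumes a POSIX shell and `grep`.

```shell
#!/bin/sh
# Append an export entry to an exports file only if that exact line
# is not already present, so re-running the step does not duplicate it.
# Usage (assumption):
#   add_export /etc/exports "/srv/nfs 10.0.0.1(rw,no_root_squash,sync)" && exportfs -rav
add_export() {
    file=$1
    line=$2
    touch "$file" || return 1
    # -x: whole-line match, -F: fixed string (no regex), -q: quiet
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```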

Step 4:

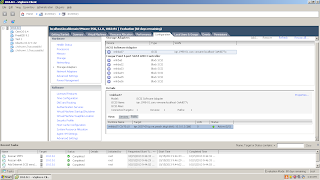

In the vsphere client, click on the esxi host, then click on the "Configuration" tab, then the "Storage" option under "Hardware". Click on "Add Storage..." and choose "Network File System". Set up the nfs share as shown below.

Step 5:

Install CentOS 6.4 on the NFS share. Right-click the ESXi host in the vSphere Client and choose "New Virtual Machine". On the first screen, choose "Custom". On the storage screen, choose the newly created NFS share. As before, on the networking screen, use the "Management" and "Production" port groups for the two interfaces. As before, edit the boot settings and attach the CentOS ISO from the host machine.

CentOS can now be installed and configured similarly to the previous installation. The management IP will be 192.168.1.11 and the production IP will be 172.16.0.11.

Networking on the esxi host should now look like this.

Step 6:

Verify the setup. Hosts on the same VLAN should be able to communicate with each other, but hosts on different VLANs should not.

Taking down the production NIC (172.16.0.10) on the VM stored on the ESXi local disk should prevent it from reaching the NFS-backed VM (172.16.0.11) over their shared VLAN. The first VM still has a default route through 192.168.1.1, but that route should not get it to the 172.16.0.x network. At first glance the hosts appear to be isolated, because a ping to 172.16.0.11 fails.

Upon closer inspection, the packets are still being routed between VLANs. The target VM simply does not respond, because it has no route to the 192.168.1.x network out of its 172.16.0.11 interface. Running tcpdump on the local host doing the routing shows this: traffic from the 192.168.1.x network should not be seen on VLAN 100, yet it is.

tcpdump -nni eth0.100

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0.100, link-type EN10MB (Ethernet), capture size 65535 bytes

03:36:01.036035 IP 192.168.1.10 > 172.16.0.11: ICMP echo request, id 63236, seq 19, length 64

03:36:02.036025 IP 192.168.1.10 > 172.16.0.11: ICMP echo request, id 63236, seq 20, length 64

03:36:03.035997 IP 192.168.1.10 > 172.16.0.11: ICMP echo request, id 63236, seq 21, length 64

03:36:04.035992 IP 192.168.1.10 > 172.16.0.11: ICMP echo request, id 63236, seq 22, length 64

03:36:05.036125 IP 192.168.1.10 > 172.16.0.11: ICMP echo request, id 63236, seq 23, length 64

03:36:06.036007 IP 192.168.1.10 > 172.16.0.11: ICMP echo request, id 63236, seq 24, length 64

03:36:07.035814 IP 192.168.1.10 > 172.16.0.11: ICMP echo request, id 63236, seq 25, length 64
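To turn a capture like the one above into a single number, the lines can be filtered by source address. This is a minimal sketch: the helper name `count_from_prefix` is hypothetical, and it assumes the `tcpdump -nn` line layout shown above (timestamp, `IP`, source address).

```shell
#!/bin/sh
# Reads tcpdump -nn output on stdin and counts IPv4 packets whose source
# address starts with the given prefix (for example "192.168.1.").
# Usage on the routing host (assumption):
#   timeout 10 tcpdump -l -nni eth0.100 2>/dev/null | count_from_prefix 192.168.1.
count_from_prefix() {
    awk -v prefix="$1" '
        $2 == "IP" && index($3, prefix) == 1 { n++ }
        END { print n + 0 }
    '
}
```

Any count above zero on eth0.100 means management-network traffic is reaching VLAN 100.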

To prevent communication between the vlans, add some more rules to the firewall.

iptables -I FORWARD -i eth0.101 -o eth0.100 -j DROP

iptables -I FORWARD -i eth0.100 -o eth0.101 -j DROP

Another ping test and tcpdump capture confirm that traffic is no longer crossing between VLANs. The production and management networks are isolated from each other as intended.
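Besides the ping test, the rule set itself can be checked for both DROP rules. The sketch below is one way to do that: the helper name `vlans_isolated` is hypothetical, and it assumes the rules were added exactly as written above, so that `iptables -S FORWARD` prints them without extra match options.

```shell
#!/bin/sh
# Reads `iptables -S FORWARD` output on stdin; succeeds only if DROP rules
# exist for both directions between the two interfaces.
# Usage (assumption):
#   iptables -S FORWARD | vlans_isolated eth0.100 eth0.101 && echo isolated
vlans_isolated() {
    a=$1
    b=$2
    rules=$(cat)
    printf '%s\n' "$rules" | grep -qxF -- "-A FORWARD -i $a -o $b -j DROP" &&
    printf '%s\n' "$rules" | grep -qxF -- "-A FORWARD -i $b -o $a -j DROP"
}
```

This only confirms that the rules are present. It does not replace the ping and tcpdump checks, which test actual traffic.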